Welcome to “Handmade Hero Notes”, the book where we follow the footsteps of Handmade Hero in making the complete game from scratch, with no external libraries. If you'd like to follow along, preorder the game on handmadehero.org, and you will receive access to the GitHub repository, containing complete source code (tagged day-by-day) as well as a variety of other useful resources.

Last time, we mainly covered the theory: the basics of platform API design. We talked about the ability to abstract your code away, so that it's not tied to the operating system. We talked about writing mostly code that's shared across all the platforms the game ships on, with only a thin layer that allows the game to run on each of them. Ultimately, the complexity and efficiency of such an API boils down to how well you define the boundary between the platform-specific and the platform-independent code.

We're now going to continue the transition towards platform-independent code that we started once we'd gone through all that theory. We said that we want timing, user input, the bitmap and sound buffers, and file data to come into our platform-independent layer, and we've only done the bitmap part so far. We also want to eventually allow the game to request file data on its side.


0.1 Set the Stage for Today

0.2 Our Approach to API Design

1 Move Sound Across the API Boundary

1.1 Imagine Sound Use Case

1.2 Port Sine Wave Code

1.3 Start Compiling

1.4 Fix Compiler Errors

2 Use Platform-Specific Sounds

2.1 Update Win32FillSoundBuffer

2.2 Introduce Win32ClearBuffer

2.3 Increase Buffer Size

2.4 Expand Samples Buffer Size

2.5 Back to Feature Parity

3 Recap

4 Programming Notions

4.1 Alloca

5 Side Considerations

5.1 Update build.bat: Remove Error Message

5.2 On Temporality of Sound

5.3 Buffer Overruns

6 Navigation

Set the Stage for Today

We left our code at feature parity compared to where we started from. We draw the weird gradient the same way as before, if you've done the exercise we can even control its offset, and we can hear the tone.... Well, actually, we didn't touch this part. All the sound things still happen on the platform layer, and that's what we're going to focus on today.

There's a lot of subtlety involved in how you handle the time, and it's linked with the temporal nature of sound (more on this in subsection 5.2). Most games handle time poorly, with their game loops largely ignorant of what “advancing time” actually means. We'll try and get it correct from the get-go, albeit our model will be pretty simplistic at first (for instance, we might not always account for potential external interrupts happening from the system, or be otherwise as robust as we'd want to be... it will still be a good point to start from).

Our Approach to API Design

We'll start by looking at GameUpdateAndRender, state what we want to happen, and then move on from there. Why not start the other way round, you might ask?

The design approach that we're going for is: Always write the usage code first when designing something like an API. If you need to define what the platform layer gives you, you don't want to do it upfront. You'd rather write the code that fakes using the thing a given API would provide in an ideal manner. You would then look at this usage case and try to implement something as close to it as you can. Any potential intricacies will remain “under the hood” until you can show in usage that you actually need them.

Always Write the Usage Code First.

Move Sound Across the API Boundary

Imagine Sound Use Case

Let's look at our newly-created handmade.cpp. Inside GameUpdateAndRender call, we can imagine that we want to output sound. As for the parameters for such a call, we can imagine something like this:

- Actual Samples (in memory): First of all, we want to specify a sound buffer to which we would have written the sound samples: this will be our first parameter.

- Sample count: Since we'll be writing a sound lasting a certain amount of time, we'll need to write a specific amount of sound samples. We don't know upfront how many however: this will depend on the time that we'll be receiving. This means that we'll need to pass the final sample count to the function.

- Sound Format: We don't really want to specify that, so we'll skip it. The format we defined in the platform layer, interleaved stereo 16-bit samples, seems like a fine one to use by default for our purposes.

- Offset in time: This we'd want to make happen at some point. The offset would allow us to overwrite old samples or to skip ahead. We aren't going to do that just yet; we want to start simple and have the game give us a continuous stream of samples. So let's leave a TODO for now.

And... that seems like the whole of the call for now. This translates into the following code:

internal void

GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset)

{

// TODO(casey): Allow sample offsets here for more robust platform options

GameOutputSound(SoundBuffer, SampleCountToOutput);

RenderWeirdGradient(Buffer, XOffset, YOffset);

}

Port Sine Wave Code

Of course, we don't have a function called GameOutputSound yet. Let's create one!

In win32_handmade.cpp, we only use the sound system to output the sine wave. The code responsible for sine wave sample generation is fairly straightforward:

s16 *SampleOut = (s16 *)Region1;

DWORD Region1SampleCount = Region1Size / SoundOutput->BytesPerSample;

for (DWORD SampleIndex = 0;

SampleIndex < Region1SampleCount;

++SampleIndex)

{

f32 SineValue = sinf(SoundOutput->tSine);

s16 SampleValue = (s16)(SineValue * SoundOutput->ToneVolume);

*SampleOut++ = SampleValue;

*SampleOut++ = SampleValue;

SoundOutput->tSine += 2.0f * Pi32 * 1.0f / (f32)SoundOutput->WavePeriod;

++SoundOutput->RunningSampleIndex;

}

We can easily take this code and port it over to become cross-platform. Here's how we're going to approach it:

- We'll define the function GameOutputSound inside handmade.cpp. As we said, it will take a SoundBuffer, which for now we can define to be some game_sound_output_buffer *, and an int SampleCountToOutput. Inside, we'll paste the code above. Don't eliminate the code from win32_handmade.cpp! We'll return to it in a minute.

internal void

GameOutputSound(game_sound_output_buffer *SoundBuffer, int SampleCountToOutput)

{

s16 *SampleOut = (s16 *)Region1;

DWORD Region1SampleCount = Region1Size / SoundOutput->BytesPerSample;

for (DWORD SampleIndex = 0;

SampleIndex < Region1SampleCount;

++SampleIndex)

{

f32 SineValue = sinf(SoundOutput->tSine);

s16 SampleValue = (s16)(SineValue * SoundOutput->ToneVolume);

*SampleOut++ = SampleValue;

*SampleOut++ = SampleValue;

SoundOutput->tSine += 2.0f * Pi32 * 1.0f / (f32)SoundOutput->WavePeriod;

++SoundOutput->RunningSampleIndex;

}

}

internal void

GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset)

{

GameOutputSound(SoundBuffer, SampleCountToOutput);

RenderWeirdGradient(Buffer, XOffset, YOffset);

}

- We need to make some changes to this code to make it cross-platform:

  - SampleOut will be coming from SoundBuffer.

  - ToneVolume can be defined as a local variable with a constant value.

  - tSine can be local_persist (i.e. static) for now. We're going to pull it away shortly.

  - SampleCountToOutput will replace Region1SampleCount.

  - We can also get rid of RunningSampleIndex, since we don't care about it here.

This will result in the following function:

internal void

GameOutputSound(game_sound_output_buffer *SoundBuffer, int SampleCountToOutput)

{

local_persist f32 tSine;

s16 ToneVolume = 3000;

s16 *SampleOut = SoundBuffer->Samples;

for (DWORD SampleIndex = 0;

SampleIndex < SampleCountToOutput;

++SampleIndex)

{

f32 SineValue = sinf(tSine);

s16 SampleValue = (s16)(SineValue * ToneVolume);

*SampleOut++ = SampleValue;

*SampleOut++ = SampleValue;

tSine += 2.0f * Pi32 * 1.0f / (f32)SoundOutput->WavePeriod;

}

}

We still have that WavePeriod that we were getting from the SoundOutput structure. Now, if you think about it, wave period is referring to our test code of the sine wave. We were calculating it in the following manner:

SoundOutput.SamplesPerSecond = 48000;

//...

SoundOutput.ToneHz = 256;

// ...

SoundOutput.WavePeriod = SoundOutput.SamplesPerSecond / SoundOutput.ToneHz;

Now, we can safely bring over ToneHz in the same manner as we did with ToneVolume. However, SamplesPerSecond belongs to the platform-specific code: it's being set and used for sound buffer initialization. The fact that the platform-independent code doesn't know about SamplesPerSecond is a strong hint that the platform, when it supplies us with the game_sound_output_buffer structure, should also provide the samples-per-second value for us to use.

local_persist f32 tSine;

s16 ToneVolume = 3000;

int ToneHz = 256;

int WavePeriod = SoundBuffer->SamplesPerSecond / ToneHz;

s16 *SampleOut = SoundBuffer->Samples;

for (DWORD SampleIndex = 0;

SampleIndex < SampleCountToOutput;

++SampleIndex)

{

f32 SineValue = sinf(tSine);

s16 SampleValue = (s16)(SineValue * ToneVolume);

*SampleOut++ = SampleValue;

*SampleOut++ = SampleValue;

tSine += 2.0f * Pi32 * 1.0f / (f32)WavePeriod;

}
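To make the arithmetic in the listing above concrete, here is a minimal standalone sketch. It is not part of the Handmade Hero source: the helper names WavePeriodInSamples and PhaseStepPerSample are made up for illustration, and Pi32 is a local constant rather than the macro used in the text. Note that the integer division truncates: 48000 / 256 gives 187 rather than 187.5, so the test tone comes out very slightly sharp.

```cpp
#include <assert.h>

// Hypothetical helper: number of samples in one full cycle of the tone.
// Integer division truncates, exactly as in the listing above.
static int WavePeriodInSamples(int SamplesPerSecond, int ToneHz)
{
    assert(ToneHz > 0);
    return SamplesPerSecond / ToneHz;
}

// Hypothetical helper: how far tSine advances per output sample, in radians.
// One full cycle (2*pi) is spread evenly over WavePeriod samples.
static float PhaseStepPerSample(int WavePeriod)
{
    assert(WavePeriod > 0);
    const float Pi32 = 3.14159265359f;
    return 2.0f * Pi32 / (float)WavePeriod;
}
```

With 48000 samples per second and a 256 Hz tone, the period is 187 samples and tSine advances by roughly 0.0336 radians per sample.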

Start Compiling

We can now start compiling and let the compiler errors guide us.

Alt-N in 4coder, and F8 if you use the build system in VSCode.

The first error that you're going to hit is the missing game_sound_output_buffer identifier (duh). Let's add it to handmade.h. We know exactly what needs to go in there: Samples and SamplesPerSecond:

struct game_offscreen_buffer

{

// ...

};

struct game_sound_output_buffer

{

int SamplesPerSecond;

s16* Samples;

};

On second thought, we can make the API even simpler by also making SampleCountToOutput (which we can simply call SampleCount) part of game_sound_output_buffer:

struct game_sound_output_buffer

{

int SamplesPerSecond;

int SampleCount;

s16* Samples;

};

internal void

GameOutputSound(game_sound_output_buffer *SoundBuffer)

{

// ...

for (int SampleIndex = 0; SampleIndex < SoundBuffer->SampleCount; ++SampleIndex)

{

// ...

}

}

internal void

GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset)

{

GameOutputSound(SoundBuffer);

RenderWeirdGradient(Buffer, XOffset, YOffset);

}

We can update our GameUpdateAndRender call to actually receive the sound output buffer from the system. We need to make changes in handmade.h and handmade.cpp; we'll get to the platform code in a second.

// handmade.cpp

internal void

GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset,

game_sound_output_buffer* SoundBuffer)

{

// ...

}

// handmade.h

internal void GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset,

game_sound_output_buffer *SoundBuffer);

You can declare a function with a specific signature as many times as you wish, anywhere in your code. But you can only define a specific function (give it a body) once.

Syntactically, function definition has the body (in curly braces {} with no semicolon) while the function declaration is immediately followed by semicolon ;, as you can see above.
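As a small illustration of the difference, the sketch below declares a function twice and defines it once. SamplesPerFrame is a hypothetical name chosen for this example only; it does not appear in the Handmade Hero source.

```cpp
#include <assert.h>

// Declarations: a signature followed by a semicolon. Repeating one is fine.
static int SamplesPerFrame(int SamplesPerSecond, int FramesPerSecond);
static int SamplesPerFrame(int SamplesPerSecond, int FramesPerSecond);

// Definition: the same signature followed by a body in curly braces.
// A second definition of this function would be a compile error.
static int SamplesPerFrame(int SamplesPerSecond, int FramesPerSecond)
{
    assert(FramesPerSecond > 0);
    return SamplesPerSecond / FramesPerSecond;
}
```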

Our next error is the missing sinf function. Let's simply move the math.h include to the top, above the point where handmade.cpp is included:

// TODO(casey): Implement sine ourselves

#include <math.h>

#include <stdint.h>

typedef // ...

#define internal static

#define local_persist static

#define global_variable static

#define Pi32 3.14159265359f

#include "handmade.cpp"

#include <windows.h>

#include <stdio.h>

#include <xinput.h>

#include <dsound.h>

Fix Compiler Errors

We finally arrive down to the platform layer. We've got the sound output buffer now which has to be filled by the game... and you'll notice that it's really not that big. Let's do a quick calculation.

We're aiming for a framerate of 60 frames per second, and our sound buffer outputs 48000 samples per second:

$$\frac{48000\ samples/second}{60\ frames/second} = 800\ samples/frame$$

We know that each sample is 2 bytes (16 bits) long, and there are 2 of them at a time (one per channel), which results in \(800 \times 2 \times 2 = 3200\) bytes. That's really not a lot. Considering that modern processors are capable of moving gigabytes of data per second, it's about the most insignificant amount of data a modern processor has seen.

So what happens here is that we've elected to suffer an extra memory buffer copy as the cost of abstracting things out and presenting a cleaner interface: the game writes to a single buffer, without having to think about DirectSound's two-region ring buffer. It's a tradeoff, but we choose the more expensive and cleaner solution, considering that some operating systems don't even have ring buffer systems in the first place! We can always return later and introduce the added complexity if it turns out we really need those extra cycles.

We'll size the sound buffer for 30 frames per second, because we'll often be locking the game to 30 frames per second for debug purposes. It's still only 6400 bytes, double what we calculated above.
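The two figures above can be checked with a few lines of code. This is only a worked example of the arithmetic; SoundBytesPerFrame is a hypothetical helper, not something from the Handmade Hero source.

```cpp
#include <assert.h>
#include <stdint.h>

// Hypothetical helper: bytes of interleaved audio needed to cover one frame
// of game time at the given frame rate.
static uint32_t SoundBytesPerFrame(uint32_t SamplesPerSecond, uint32_t FramesPerSecond,
                                   uint32_t Channels, uint32_t BytesPerChannelSample)
{
    assert(FramesPerSecond > 0);
    uint32_t SamplesPerFrame = SamplesPerSecond / FramesPerSecond;
    return SamplesPerFrame * Channels * BytesPerChannelSample;
}
```

For 48000 samples per second, 2 channels and 2 bytes per channel sample, this gives 3200 bytes at 60 frames per second and 6400 bytes at 30.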

So, let's fill out the SoundBuffer to pass to our game:

- SamplesPerSecond: We have it already, pass it as is.

- SampleCount: As we said, we want 1/30th of a second's worth of samples. It's not final shipping code by any stretch, so simply calculating it on the spot should be fine.

- Samples: We'll cheese this part as well and simply allocate a large enough buffer on the stack. We want \(\frac{48000\ samples/second}{30\ frames/second} \times 2\ channels\) values.

s16 Samples[(48000/30)*2];

game_sound_output_buffer SoundBuffer = {}; // clear to zero!

SoundBuffer.SamplesPerSecond = SoundOutput.SamplesPerSecond;

SoundBuffer.SampleCount = SoundBuffer.SamplesPerSecond / 30;

SoundBuffer.Samples = Samples;

game_offscreen_buffer Buffer = {};

//...

GameUpdateAndRender(&Buffer, XOffset, YOffset, &SoundBuffer);

We hope it goes without saying: don't try this at home in your shipping code! This is super janky and error prone, and we'll be replacing this code in a short while already. But! We're now compilable, and should be running as before.
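For readers who want to try the game-side code in isolation, the pieces so far can be put together in a standalone sketch. Treat it as an illustration under assumptions rather than the actual source: the typedefs and the local Pi32 constant stand in for the definitions in win32_handmade.cpp, static replaces the internal and local_persist macros, and FillOneThirtiethOfASecond is a hypothetical stand-in for the platform layer.

```cpp
#include <assert.h>
#include <math.h>
#include <stdint.h>

typedef int16_t s16;
typedef float f32;

struct game_sound_output_buffer
{
    int SamplesPerSecond;
    int SampleCount; // number of stereo frames to write
    s16 *Samples;    // interleaved left/right: 2 * SampleCount values
};

static void GameOutputSound(game_sound_output_buffer *SoundBuffer)
{
    static f32 tSine; // local_persist in the text; keeps the phase across calls
    const f32 Pi32 = 3.14159265359f;
    s16 ToneVolume = 3000;
    int ToneHz = 256;
    int WavePeriod = SoundBuffer->SamplesPerSecond / ToneHz;
    assert(WavePeriod > 0);

    s16 *SampleOut = SoundBuffer->Samples;
    for (int SampleIndex = 0; SampleIndex < SoundBuffer->SampleCount; ++SampleIndex)
    {
        f32 SineValue = sinf(tSine);
        s16 SampleValue = (s16)(SineValue * ToneVolume);
        *SampleOut++ = SampleValue; // left channel
        *SampleOut++ = SampleValue; // right channel
        tSine += 2.0f * Pi32 / (f32)WavePeriod;
    }
}

// Hypothetical stand-in for the platform layer: asks the game for 1/30th of
// a second of sound and returns how many stereo frames were requested.
// Samples must have room for at least 2 * (SamplesPerSecond / 30) values.
static int FillOneThirtiethOfASecond(s16 *Samples, int SamplesPerSecond)
{
    game_sound_output_buffer SoundBuffer = {};
    SoundBuffer.SamplesPerSecond = SamplesPerSecond;
    SoundBuffer.SampleCount = SamplesPerSecond / 30;
    SoundBuffer.Samples = Samples;
    GameOutputSound(&SoundBuffer);
    return SoundBuffer.SampleCount;
}
```

Calling FillOneThirtiethOfASecond with a buffer of (48000/30)*2 values and a rate of 48000 writes 1600 stereo frames, each with identical left and right values no larger in magnitude than ToneVolume.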

Use Platform-Specific Sounds

Right now, we're still using the sine waves generated in the platform layer. The game layer is generating the sounds for the platform, but we aren't using those in any way. Let's fix this.

Update Win32FillSoundBuffer

We have Win32FillSoundBuffer function which is currently creating the sounds. We could adapt it so it would simply write whatever sounds it will receive from our game:

if (SUCCEEDED(GlobalSecondaryBuffer->GetCurrentPosition(&PlayCursor, &WriteCursor)))

{

// ...

Win32FillSoundBuffer(&SoundOutput, ByteToLock, BytesToWrite, &SoundBuffer);

}

In here, we would simply take the “source samples” and write them to the “destination samples”. Simple copy, nothing else:

internal void

Win32FillSoundBuffer(win32_sound_output *SoundOutput, DWORD ByteToLock, DWORD BytesToWrite,

game_sound_output_buffer *SourceBuffer)

{

VOID *Region1;

DWORD Region1Size;

VOID *Region2;

DWORD Region2Size;

if(SUCCEEDED(GlobalSecondaryBuffer->Lock(ByteToLock, BytesToWrite,

&Region1, &Region1Size,

&Region2, &Region2Size,

0)))

{

// TODO(casey): assert that Region1Size/Region2Size are valid

DWORD Region1SampleCount = Region1Size / SoundOutput->BytesPerSample;

s16 *SourceSample = SourceBuffer->Samples;

s16 *DestSample = (s16 *)Region1;

for (DWORD SampleIndex = 0;

SampleIndex < Region1SampleCount;

++SampleIndex)

{

*DestSample++ = *SourceSample++;

*DestSample++ = *SourceSample++;

++SoundOutput->RunningSampleIndex;

}

DestSample = (s16 *)Region2;

DWORD Region2SampleCount = Region2Size / SoundOutput->BytesPerSample;

for (DWORD SampleIndex = 0;

SampleIndex < Region2SampleCount;

++SampleIndex)

{

*DestSample++ = *SourceSample++;

*DestSample++ = *SourceSample++;

++SoundOutput->RunningSampleIndex;

}

}

GlobalSecondaryBuffer->Unlock(Region1, Region1Size, Region2, Region2Size);

}

}

Introduce Win32ClearBuffer

We also need to modify our startup code. The compiler will fail since we were calling Win32FillSoundBuffer to run the sine wave right after initializing DirectSound. That's fine, we could update it, except now we simply want to clear the whole buffer. So what we really want here is a function which simply flushes the buffer with 0s:

Win32InitDSound(Window, SoundOutput.SamplesPerSecond, SoundOutput.SecondaryBufferSize);

Win32ClearBuffer(&SoundOutput);

GlobalSecondaryBuffer->Play(0, 0, DSBPLAY_LOOPING);

As for implementing this function, it should be quite straightforward. First, we lock the secondary buffer for its entire length and unlock it at the end. We could copy the necessary code straight from Win32FillSoundBuffer.

internal void

Win32ClearBuffer(win32_sound_output *SoundOutput)

{

VOID *Region1;

DWORD Region1Size;

VOID *Region2;

DWORD Region2Size;

if(SUCCEEDED(GlobalSecondaryBuffer->Lock(0, SoundOutput->SecondaryBufferSize,

&Region1, &Region1Size,

&Region2, &Region2Size,

0)))

{

// Do the work

GlobalSecondaryBuffer->Unlock(Region1, Region1Size, Region2, Region2Size);

}

}

internal void

Win32FillSoundBuffer(...)

{

// ...

}

After we've locked our buffer, we'll take the entirety of region 1 and fill it with zeroes. We don't even need to operate on 16-bit samples any more; we can simply go and clear bytes one by one.

u8 *DestSample = (u8 *)Region1;

for (DWORD ByteIndex = 0;

ByteIndex < Region1Size;

++ByteIndex)

{

*DestSample++ = 0;

}

GlobalSecondaryBuffer->Unlock(Region1, Region1Size, Region2, Region2Size);

We don't think we'll ever need to do anything with region 2 during startup, but just to be sure, let's clear it as well:

u8 *DestSample = (u8 *)Region1;

for (DWORD ByteIndex = 0;

ByteIndex < Region1Size;

++ByteIndex)

{

*DestSample++ = 0;

}

DestSample = (u8 *)Region2;

for (DWORD ByteIndex = 0;

ByteIndex < Region2Size;

++ByteIndex)

{

*DestSample++ = 0;

}

GlobalSecondaryBuffer->Unlock(Region1, Region1Size, Region2, Region2Size);

Since we're trying to do everything ourselves, we won't be using this function. We do encourage you to try it out as an exercise.
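Since both regions get exactly the same treatment, the two loops above could be folded into one small helper. The sketch below is a standalone illustration and not the Handmade Hero source: ZeroRegion and ZeroBothRegions are hypothetical names, and plain pointers stand in for the regions that DirectSound hands back from Lock.

```cpp
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint8_t u8;

// Hypothetical helper: writes zero to every byte of one locked region.
// A region of size 0 (as Region2 often is) is simply skipped.
static void ZeroRegion(void *Region, size_t RegionSize)
{
    u8 *DestByte = (u8 *)Region;
    for (size_t ByteIndex = 0; ByteIndex < RegionSize; ++ByteIndex)
    {
        *DestByte++ = 0;
    }
}

// Clears both regions, as Win32ClearBuffer does between Lock and Unlock.
static void ZeroBothRegions(void *Region1, size_t Region1Size,
                            void *Region2, size_t Region2Size)
{
    // A null pointer is only acceptable for an empty region.
    assert(Region1 || Region1Size == 0);
    assert(Region2 || Region2Size == 0);
    ZeroRegion(Region1, Region1Size);
    ZeroRegion(Region2, Region2Size);
}
```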

Increase Buffer Size

The code should now compile, but it will crash immediately when you run it. This is because we still write the full span from where we previously stopped writing up to the target cursor, and not the 1/30th of a second's worth of samples that we pass as the source.

There are many ways to fix this issue. Ideally, we'd be changing the length of BytesToWrite at each frame to something corresponding to how much we actually want to write. We might also update the samples just before the play cursor. We'll do all these things in due time. For now however, let's do the simpler thing: output exactly the number of samples the platform layer expects from us.

In order to do that, we face one particular issue: the code calculating BytesToWrite and outputting sound sits below our GameUpdateAndRender call. That's fine for outputting sound: we want to write the samples first, and then release them to the operating system. The code that calculates BytesToWrite, however, we can simply move above our SoundBuffer definition. We'll use a boolean SoundIsValid to verify that everything went smoothly.

DWORD PlayCursor;

DWORD WriteCursor;

b32 SoundIsValid = false;

if (SUCCEEDED(GlobalSecondaryBuffer->GetCurrentPosition(&PlayCursor, &WriteCursor)))

{

DWORD ByteToLock = ((SoundOutput.RunningSampleIndex * SoundOutput.BytesPerSample)

% SoundOutput.SecondaryBufferSize);

DWORD TargetCursor = ((PlayCursor +

(SoundOutput.LatencySampleCount * SoundOutput.BytesPerSample))

% SoundOutput.SecondaryBufferSize);

DWORD BytesToWrite;

if(ByteToLock > TargetCursor)

{

BytesToWrite = SoundOutput.SecondaryBufferSize - ByteToLock;

BytesToWrite += TargetCursor;

}

else

{

BytesToWrite = TargetCursor - ByteToLock;

}

SoundIsValid = true;

}

s16 Samples[(48000/30)*2];

game_sound_output_buffer SoundBuffer = {};

SoundBuffer.SamplesPerSecond = SoundOutput.SamplesPerSecond;

SoundBuffer.SampleCount = SoundBuffer.SamplesPerSecond / 30;

SoundBuffer.Samples = Samples;

// Definition of the render buffer...

GameUpdateAndRender(&Buffer, XOffset, YOffset, &SoundBuffer);

if (SoundIsValid)

{

Win32FillSoundBuffer(&SoundOutput, ByteToLock, BytesToWrite, &SoundBuffer);

}
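The wrap-around logic that computes BytesToWrite is easier to follow with concrete numbers. The sketch below isolates it in a standalone function; RingBytesToWrite is a hypothetical name used for illustration, and the branch structure mirrors the listing above.

```cpp
#include <assert.h>
#include <stdint.h>

// Hypothetical helper: number of bytes between ByteToLock and TargetCursor in
// a ring buffer of BufferSize bytes, walking forward and wrapping at the end.
static uint32_t RingBytesToWrite(uint32_t ByteToLock, uint32_t TargetCursor,
                                 uint32_t BufferSize)
{
    assert(ByteToLock < BufferSize && TargetCursor < BufferSize);
    uint32_t BytesToWrite;
    if (ByteToLock > TargetCursor)
    {
        // The span wraps: from ByteToLock to the end of the buffer...
        BytesToWrite = BufferSize - ByteToLock;
        // ...plus from the start of the buffer up to TargetCursor.
        BytesToWrite += TargetCursor;
    }
    else
    {
        // No wrap: a single contiguous span.
        BytesToWrite = TargetCursor - ByteToLock;
    }
    return BytesToWrite;
}
```

Assuming a one-second buffer of 192000 bytes (48000 samples of 4 bytes each), a ByteToLock of 190000 and a TargetCursor of 2000 give 2000 + 2000 = 4000 bytes, which is 1000 samples.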

We also want to hoist the variables we define, such as ByteToLock, TargetCursor and BytesToWrite, out of the if block so that they are accessible outside its scope. For instance, Win32FillSoundBuffer needs to see ByteToLock and BytesToWrite!

DWORD ByteToLock;

DWORD TargetCursor;

DWORD BytesToWrite;

DWORD PlayCursor;

DWORD WriteCursor;

b32 SoundIsValid = false;

if (SUCCEEDED(GlobalSecondaryBuffer->GetCurrentPosition(&PlayCursor, &WriteCursor)))

{

ByteToLock = ((SoundOutput.RunningSampleIndex * SoundOutput.BytesPerSample)

% SoundOutput.SecondaryBufferSize);

TargetCursor = ((PlayCursor +

(SoundOutput.LatencySampleCount * SoundOutput.BytesPerSample))

% SoundOutput.SecondaryBufferSize);

// ...

}

Now that we've completed this refactoring step, we finally know how many samples we need our game to write. It's simply BytesToWrite divided by BytesPerSample:

s16 Samples[(48000/30)*2];

game_sound_output_buffer SoundBuffer = {};

SoundBuffer.SamplesPerSecond = SoundOutput.SamplesPerSecond;

SoundBuffer.SampleCount = BytesToWrite / SoundOutput.BytesPerSample;

SoundBuffer.Samples = Samples;

Expand Samples Buffer Size

This update creates another issue: we can no longer know in advance how big our Samples buffer should be. At this point we can simply make Samples as big as our secondary buffer and use only as much of it as SampleCount says.

It's worth doing the buffer allocation the right way from the start, so that, should the buffer size change, we don't crash and burn and have to update everything we set manually all over again. To do that, we could use something like alloca to keep allocating on the program stack (more on this in subsection 4.1), or go ahead and use the already familiar VirtualAlloc to allocate on the heap (virtual memory). Let's do the latter, right after we initialize DirectSound:

Win32InitDSound(Window, SoundOutput.SamplesPerSecond, SoundOutput.SecondaryBufferSize);

Win32ClearBuffer(&SoundOutput);

GlobalSecondaryBuffer->Play(0, 0, DSBPLAY_LOOPING);

s16 *Samples = (s16 *)VirtualAlloc(0, SoundOutput.SecondaryBufferSize,

MEM_RESERVE | MEM_COMMIT, PAGE_READWRITE);

// ...

game_sound_output_buffer SoundBuffer = {};

// ...

That's it! This should again result in working sound. However, this time you'll notice that we have no sinf inside win32_handmade.cpp: all the sound generation is happening in our cross-platform code!

Back to Feature Parity

Our refactoring went well, but we aren't at feature parity yet! Before we set off porting the sound generation to the game layer, we could control the pitch with the gamepad. Let's get this functionality back!

Since we've done so much work on sound already, let's make a quick and dirty job of it, and think about doing it better next time. We'll simply pass the tone to GameUpdateAndRender. Let's run through the changes!

First, update the call inside WinMain:

game_offscreen_buffer Buffer = {};

// ...

GameUpdateAndRender(&Buffer, XOffset, YOffset, &SoundBuffer, SoundOutput.ToneHz);

Next, update the function declaration inside handmade.h:

internal void GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset, game_sound_output_buffer *SoundBuffer, int ToneHz);

Then, receive ToneHz inside the actual function definition and immediately send it to GameOutputSound:

internal void

GameUpdateAndRender(game_offscreen_buffer* Buffer, int XOffset, int YOffset, game_sound_output_buffer* SoundBuffer, int ToneHz)

{

GameOutputSound(SoundBuffer, ToneHz);

RenderWeirdGradient(Buffer, XOffset, YOffset);

}

Last but not least, update GameOutputSound to actually use the tone!

internal void

GameOutputSound(game_sound_output_buffer *SoundBuffer, int ToneHz)

{

local_persist f32 tSine;

s16 ToneVolume = 3000;

int WavePeriod = SoundBuffer->SamplesPerSecond / ToneHz;

// ...

}

That's it!

Recap

A sound API is difficult to get right for cross-platform use, as any minor inconsistency is immediately noticeable. We'll be doing more tuning and improving on the sound quite soon so that it's not only applicable to sine waves; in the meantime, we can consider the first cross-platform transition of our sound complete, so next time we can focus on other things. For now however, let's close out with a TODO to keep us busy in the future:

DWORD ByteToLock;

DWORD TargetCursor;

DWORD BytesToWrite;

DWORD PlayCursor;

DWORD WriteCursor;

b32 SoundIsValid = false;

// TODO(casey): Tighten up sound logic so that we know where we should be

// writing to and can anticipate the time spent in the game updates.

if (SUCCEEDED(GlobalSecondaryBuffer->GetCurrentPosition(&PlayCursor, &WriteCursor)))

{

// ...

}

Programming Notions

Alloca

alloca looks like something you'd find in the C Standard Library. However, it is not part of the standard: many compilers simply provide it as an extension that lets you dynamically allocate memory on the stack.

Why allocate on stack, as opposed to the heap? As with anything, there're several advantages and disadvantages to it.

Its advantages are:

- It's faster. Reserving a new page of virtual memory takes time since it's a kernel call; stack allocations are, for all intents and purposes, immediate.

- You don't need to think about freeing the memory. Once the function that allocated the memory returns, the stack is popped back automatically, and the memory is freed. (Only when the function returns, mind you, not when you leave a local scope! You can't run alloca inside a loop indefinitely!)

The downside is the following:

- Since allocation happens on the stack, it's limited by the stack size. The stack is shared with all the function parameters, local variables, etc., and once it's gone, it's gone. There's only a stack overflow and the inevitable program crash waiting at the end.

For this reason, this is again one of those tools which might be useful for a quick task usually happening inside the debug code.
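A minimal sketch of what using alloca looks like is shown below. Because alloca is not standardized, the header and spelling are assumptions that depend on the toolchain: here we assume _alloca from malloc.h on Windows and alloca from alloca.h elsewhere. StackAlloc and SumRampOnStack are hypothetical names for this example only.

```cpp
#include <assert.h>
#include <stddef.h>

#if defined(_WIN32)
#include <malloc.h> // assumed: Windows toolchains expose _alloca here
#define StackAlloc(Size) _alloca(Size)
#else
#include <alloca.h> // assumed: glibc and most Unix-like systems
#define StackAlloc(Size) alloca(Size)
#endif

// Fills a temporary stack buffer with the ramp 0..Count-1 and returns its sum.
// Keep Count small: the buffer comes out of the (limited) program stack.
static int SumRampOnStack(int Count)
{
    assert(Count > 0 && Count <= 4096);
    int *Scratch = (int *)StackAlloc((size_t)Count * sizeof(int));
    for (int Index = 0; Index < Count; ++Index)
    {
        Scratch[Index] = Index;
    }
    int Sum = 0;
    for (int Index = 0; Index < Count; ++Index)
    {
        Sum += Scratch[Index];
    }
    // No free() needed: Scratch is released when this function returns.
    return Sum;
}
```

The upper bound on Count is an arbitrary guard chosen for this sketch; it reflects the stack-size limitation described above rather than any particular platform limit.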

(Back to subsection 2.4)

Side Considerations

Update build.bat: Remove Error Message

Let's quickly tweak our build.bat file. Right now, if we try to compile, we're usually greeted with the following message:

A subdirectory or file build already exists.

This is because we try to create a build directory each time we run our build.bat! Let's fix this since batch scripting allows us to add some logic:

@echo off

if not exist build (mkdir build)

pushd build

cl -DHANDMADE_WIN32=1 -FC -Zi ..\code\win32_handmade.cpp user32.lib gdi32.lib

popd

You can also write it in all caps, IF NOT EXIST. Both methods work fine.

On Temporality of Sound

We can't think about sound without thinking about time. The thing about sound is that it's temporal in nature. While video frames are prepared one at a time, and you can reason about each frame as a standalone painting, audio always happens over time. Therefore, in a game, how long a sound plays for is not optional: the playback must be continuous.

Let's take an example. If you write sound for 1/16th of a second (62.5ms), and then play it for 1/8th of a second (125ms), the user will hear silence or noise for the second half of the period.
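In sample counts, assuming the 48000 samples per second used throughout this chapter, the example works out as follows. The helper names are hypothetical and exist only for this worked example.

```cpp
#include <assert.h>

// Hypothetical helper: number of samples covering 1/Denominator of a second.
static int SamplesForFractionOfSecond(int SamplesPerSecond, int Denominator)
{
    assert(Denominator > 0);
    return SamplesPerSecond / Denominator;
}

// Hypothetical helper: samples that get played although nobody wrote them.
static int SamplesShort(int SamplesWritten, int SamplesPlayed)
{
    return (SamplesPlayed > SamplesWritten) ? (SamplesPlayed - SamplesWritten) : 0;
}
```

Writing 1/16th of a second yields 3000 samples, while playing 1/8th of a second consumes 6000, which leaves 3000 samples of whatever happened to be in the buffer.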

With sound, you're bound to stick with a specific sample speed or your brain will immediately pick up the error. It won't sound “slower” or “at a lower sample rate”. What you'll hear is a flat-out bug: clickiness, screeching, etc. You'll need to say: the sound will start at a given point in time, and you'll need a very specific amount of it.

Contrast this with video: if you put up an image on a screen, it can stay there for as long as you want. If you hold it for too long, the only thing the user will notice is that the framerate starts to go down and the video looks less smooth. That single frame won't disappear or glitch out in some other way if it stays up a bit longer.

That said, video frames can also be bound by time if you use some advanced rendering techniques. Motion blur, for example, may be calculated based on the amount of time you anticipate the frame will be on screen. In that case the renderer needs to know this time exactly in order to properly calculate the blur between the start and end points of all the entities; otherwise the user might see teleporting glitches in the following frame.

(Back to subsection 0.1)

Buffer Overruns

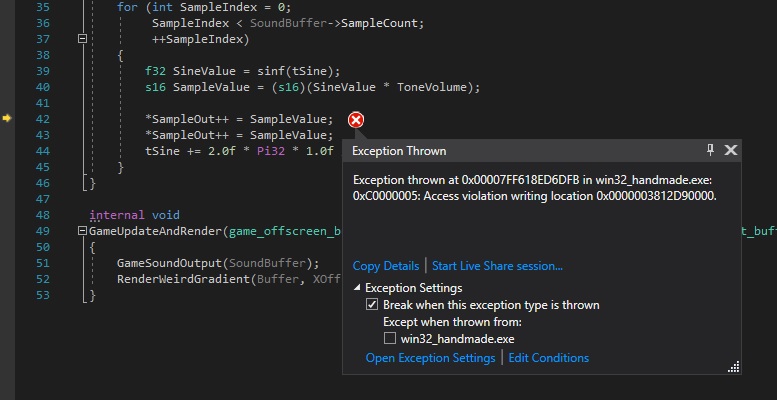

If you set a buffer to less than its intended size and then try to write to it, the program will compile just fine. However, when you try to run it, it will quickly crash. Depending on the debugger you're using, you might get a window pop-up, or simply a message in the Output window of the debugger, informing you of a “Buffer Overrun” or “Access violation writing location 0x....”

For instance, we can quickly get such an overrun if, instead of passing (48000/30)*2 as our Samples buffer size, we set it to simply (48000/30), i.e. half its intended size. If you compile and run, you'll see something similar in Visual Studio:

The program halted because the debugger inserts some checking code to see if you overwrote some memory somewhere. This is quite helpful, even if it doesn't happen all that frequently. Many people are afraid of pointers, because “They can corrupt memory!”, “They can overwrite some data” and so on. These concerns are legitimate, but there are many ways to minimize the risks, and we'll introduce many of these in the future. Furthermore, if you operate in a safe environment such as a debugger, there are even more safety nets already written for you, so you can find and fix potential errors during development.
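One of the simplest ways to reduce that risk is to carry the buffer's capacity along with the pointer and check it before writing. The sketch below is one possible guard, not the approach taken in Handmade Hero: WriteStereoFramesChecked is a hypothetical function that clamps the number of stereo frames to what actually fits.

```cpp
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef int16_t s16;

// Hypothetical guard: writes at most as many stereo frames as fit in the
// buffer and returns the number of frames actually written.
static int WriteStereoFramesChecked(s16 *Samples, size_t CapacityInValues,
                                    int FrameCount, s16 Value)
{
    assert(Samples);
    int FramesThatFit = (int)(CapacityInValues / 2); // two s16 values per frame
    if (FrameCount > FramesThatFit)
    {
        FrameCount = FramesThatFit; // clamp instead of overrunning the buffer
    }
    if (FrameCount < 0)
    {
        FrameCount = 0;
    }
    for (int FrameIndex = 0; FrameIndex < FrameCount; ++FrameIndex)
    {
        Samples[2 * FrameIndex + 0] = Value; // left
        Samples[2 * FrameIndex + 1] = Value; // right
    }
    return FrameCount;
}
```

With the half-sized buffer of (48000/30) values from the example above, a request for 1600 frames is cut down to 800 instead of writing past the end.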

(Back to subsection 1.4)

Navigation

Previous: Day 11. The Basics of Platform API Design
